Comparison of MLP and CNN in Image Classification: Fall 2020

Summary

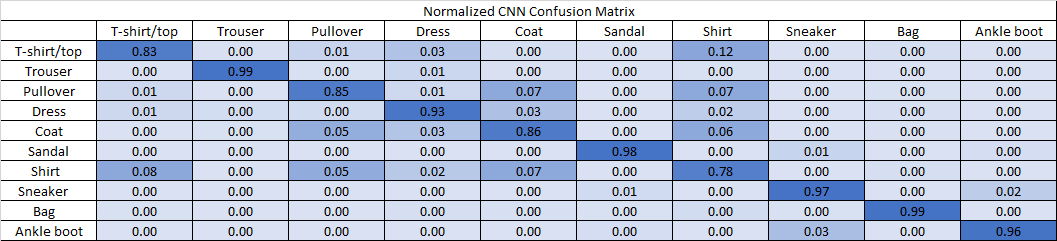

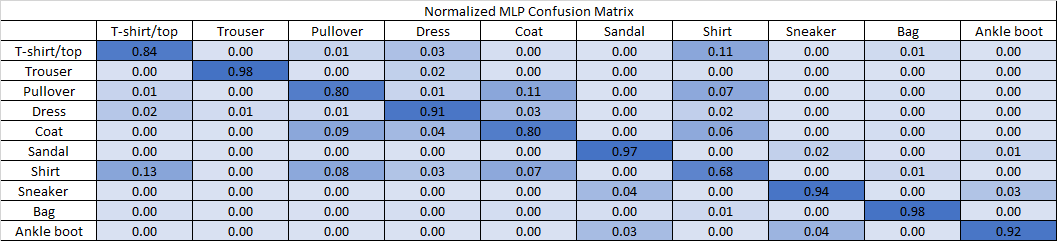

In this project, I used hyperparameter optimization to build an optimized Multilayer Perceptron (MLP) and an optimized Convolutional Neural Network (CNN), and then compared how well each classifies images from the Fashion-MNIST dataset. Accuracy alone is not a comprehensive measure of performance. I therefore evaluated both classifiers with 10-fold cross-validation and several complementary metrics: precision, recall, F1-score, Cohen’s kappa, the Matthews correlation coefficient (MCC), and cross-entropy. Together, these metrics gave a thorough comparison of both the per-class and the overall performance of the two networks. The CNN consistently outperformed the MLP on every metric. Figures 1 and 2 show the normalized confusion matrices of the two networks. Please refer to the report for a more in-depth discussion of the project.
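Most of the metrics listed above can be computed directly from a confusion matrix and the predicted class probabilities. The sketch below is a NumPy-only illustration of the formulas on a hypothetical six-sample, three-class toy example, not on Fashion-MNIST. The helper names (`confusion_counts`, `per_class_prf`, `cohen_kappa`, `multiclass_mcc`, `cross_entropy`) are hypothetical. scikit-learn provides library versions in `sklearn.metrics` (`precision_recall_fscore_support`, `cohen_kappa_score`, `matthews_corrcoef`, `log_loss`).

```python
import numpy as np


def confusion_counts(y_true, y_pred, n_classes):
    """Raw confusion matrix: rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


def per_class_prf(cm):
    """Per-class precision, recall and F1 (0 where a ratio is undefined)."""
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # column sums: how often each class was predicted
    actual = cm.sum(axis=1)     # row sums: how often each class truly occurs
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return precision, recall, f1


def cohen_kappa(cm):
    """Observed agreement corrected for the agreement expected by chance."""
    n = cm.sum()
    p_observed = np.trace(cm) / n
    p_expected = (cm.sum(axis=0) @ cm.sum(axis=1)) / n**2
    return (p_observed - p_expected) / (1 - p_expected)


def multiclass_mcc(cm):
    """Multiclass Matthews correlation coefficient computed from the confusion matrix."""
    n = cm.sum()
    correct = np.trace(cm)
    t = cm.sum(axis=1)  # true counts per class
    p = cm.sum(axis=0)  # predicted counts per class
    numerator = correct * n - t @ p
    denominator = np.sqrt(float((n**2 - p @ p) * (n**2 - t @ t)))
    return numerator / denominator if denominator > 0 else 0.0


def cross_entropy(y_true, probs, eps=1e-15):
    """Mean negative log-probability that the model assigns to the true class."""
    probs = np.clip(probs, eps, 1 - eps)
    return -np.mean(np.log(probs[np.arange(len(y_true)), y_true]))


# Hypothetical toy data: 6 samples, 3 classes, with softmax-style probabilities.
y_true = np.array([0, 0, 1, 1, 2, 2])
probs = np.array([
    [0.8, 0.1, 0.1],
    [0.4, 0.5, 0.1],
    [0.2, 0.7, 0.1],
    [0.1, 0.8, 0.1],
    [0.1, 0.2, 0.7],
    [0.6, 0.1, 0.3],
])
y_pred = probs.argmax(axis=1)  # predicted class = most probable class

cm = confusion_counts(y_true, y_pred, n_classes=3)
precision, recall, f1 = per_class_prf(cm)
kappa = cohen_kappa(cm)
mcc = multiclass_mcc(cm)
ce = cross_entropy(y_true, probs)
```

Kappa and MCC both summarize the whole confusion matrix in one number and account for class frequencies. Cross-entropy, unlike the others, also penalizes confident wrong predictions, so lower is better for it while higher is better for the rest.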

Figure 1: Normalized Confusion Matrix for the Optimized CNN

Figure 2: Normalized Confusion Matrix for the Optimized MLP
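A normalized confusion matrix rescales raw counts so that classes of different sizes can be compared. The sketch below assumes row normalization (each row divided by the number of true samples in that class), as produced by scikit-learn's `confusion_matrix(..., normalize="true")`. The figures above may have used a different normalization. The function name and toy labels are hypothetical.

```python
import numpy as np


def normalized_confusion_matrix(y_true, y_pred, n_classes):
    """Row-normalized confusion matrix: entry (i, j) is the fraction of
    samples of true class i that were predicted as class j."""
    cm = np.zeros((n_classes, n_classes), dtype=float)
    np.add.at(cm, (y_true, y_pred), 1)
    row_sums = cm.sum(axis=1, keepdims=True)
    # Rows for classes absent from y_true stay all zeros instead of dividing by zero.
    return np.divide(cm, row_sums, out=np.zeros_like(cm), where=row_sums > 0)


# Hypothetical toy labels and predictions for 3 classes.
y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = np.array([0, 1, 1, 1, 2, 0])
cm_norm = normalized_confusion_matrix(y_true, y_pred, n_classes=3)
```

With row normalization, each diagonal entry equals that class's recall, and the off-diagonal entries in a row show which classes it is most often confused with.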

Although these results were not surprising, this was my first machine learning project, and it taught me many fundamental skills. I gained experience using the TensorFlow Python library to construct neural networks, the Keras Tuner library to optimize hyperparameters, and the scikit-learn library to evaluate classifiers. I also learned how k-fold cross-validation makes full use of a dataset, since every sample is used for both training and evaluation, and how it guards against over-fitting to a single train/test split. Finally, I learned how to compare machine learning algorithms effectively using a range of performance metrics.
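The core of k-fold cross-validation is just an index split. The sketch below is a minimal NumPy version. It assumes the data are shuffled once with a fixed seed. The project's exact splitting strategy (for example, whether folds were stratified by class) is not stated here. `k_fold_indices` is a hypothetical helper. scikit-learn's `KFold` and `StratifiedKFold` in `sklearn.model_selection` are the library equivalents.

```python
import numpy as np


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation.

    The shuffled indices are split into k nearly equal folds. Each fold is
    used exactly once as the test set while the other k - 1 folds form the
    training set.
    """
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)
    folds = np.array_split(indices, k)
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, test_idx


# Toy run on 25 samples. In a real experiment, each split would train a fresh
# model on train_idx, score it on test_idx, and the k scores would be averaged.
splits = list(k_fold_indices(n_samples=25, k=10))
test_counts = np.zeros(25, dtype=int)
for train_idx, test_idx in splits:
    test_counts[test_idx] += 1  # count how often each sample is held out
```

Every sample lands in a test fold exactly once, so all of the data contributes to the performance estimate. Averaging over k folds also gives a less split-dependent estimate than a single hold-out set.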